EDA (Event-Driven Architecture)

- shweta1151

- Jun 4, 2025

- 8 min read

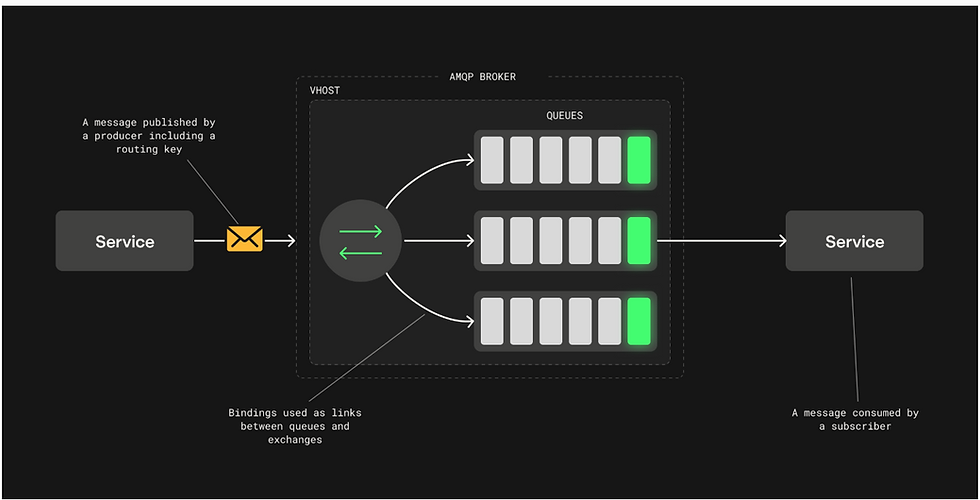

Architectural Style & Patterns: Event-Driven Architecture (EDA) is a design paradigm rather than a specific protocol. It involves systems that generate and respond to events: discrete occurrences such as “order placed”, “user signed up”, or “sensor threshold exceeded”. In an EDA, components communicate by emitting and reacting to events rather than calling each other directly via requests. This yields a highly decoupled, asynchronous architecture. Typically, EDA is implemented via messaging or streaming systems: for example, microservices might publish events to a message broker (such as an AMQP broker like RabbitMQ, Apache Kafka, or an MQTT broker), and other services subscribe to those events to perform actions. EDA supports pub-sub and CQRS patterns, and often goes hand-in-hand with microservices: instead of services calling each other synchronously (tight coupling), they share information through events (loose coupling). EDA can also be used at an enterprise integration level, with different applications broadcasting events on an event bus to integrate processes across a company. Key aspects of EDA include event producers (senders), event consumers (listeners), and an intermediary (message broker, event bus, or log) that routes or stores these events. Architecturally, EDA enables event stream processing, where streams of events are processed in real time for analytics or immediate action. Modern data architectures talk about event streaming platforms (like Apache Kafka and Amazon Kinesis) that treat events as first-class data to be stored and processed continuously. By 2025, EDA is widely recognised as critical for enabling real-time responsiveness and scalable decoupling: surveys show about 85% of organizations see significant business value in adopting EDA. However, only a smaller fraction (e.g., 13%) consider themselves mature in it, indicating many are still in early stages of implementation. This underscores that while EDA is appealing, it comes with complexity in design and tooling.
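
To make the producer, consumer, and intermediary roles concrete, here is a minimal in-process sketch. The `Event` and `EventBus` names are hypothetical and only stand in for a real broker; this toy version also delivers synchronously, whereas a real broker would decouple delivery in time as well.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Event:
    """A discrete occurrence, e.g. "order.placed"."""
    type: str
    payload: dict = field(default_factory=dict)


class EventBus:
    """Hypothetical in-process stand-in for a message broker or event bus."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[Event], None]) -> None:
        # Consumers register interest in an event type, not in a producer.
        self._subscribers[event_type].append(handler)

    def publish(self, event: Event) -> int:
        """Deliver the event to every subscriber; return how many were notified."""
        handlers = list(self._subscribers.get(event.type, []))
        for handler in handlers:
            handler(event)
        return len(handlers)


# Usage: the producer publishes once and never references billing or shipping.
bus = EventBus()
billed: list[int] = []
shipped: list[int] = []
bus.subscribe("order.placed", lambda e: billed.append(e.payload["order_id"]))
bus.subscribe("order.placed", lambda e: shipped.append(e.payload["order_id"]))
notified = bus.publish(Event("order.placed", {"order_id": 42}))
```

Adding a third consumer later is just another `subscribe` call; the publishing code does not change.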

Strengths and Use Cases: Adopting an event-driven architecture can yield multiple benefits for large-scale or complex systems:

Loose Coupling and Independent Evolution - In an EDA, producers of events don’t know who (if anyone) will consume the events. This decouples the services: they can evolve, be deployed, or be scaled independently as long as they stick to the event contract. Adding a new consumer of an event doesn’t require changing the producer. This flexibility is excellent for microservices, where you want each service to be as independent as possible. For example, an “Order Placed” event can be consumed by a billing service, a shipping service, and an analytics service, all without the order service needing to call each of those directly. New services can listen to that event in the future without touching the order service. This fosters agility and extensibility.

Real-Time Responsiveness - EDA enables systems to react to events as they occur, often in near real-time. This is crucial for scenarios like fraud detection (reacting to suspicious transaction patterns immediately), IoT monitoring (triggering an alert when sensor data crosses a threshold), or customer interactions (sending a welcome email right after a sign-up event). Instead of batch processing or polling, EDA pushes events through the system continuously. According to industry research, a majority of organisations recognise the importance of real-time data enabled by EDA. This real-time flow can improve user experiences (e.g., seeing updates instantly) and allow timely business decisions.

Scalability and Resilience - EDA, when backed by proper infrastructure, naturally scales. Event brokers or streams can handle high volumes by scaling out (e.g., Kafka can partition topics across servers). Services can consume events at their own pace, and if they are overwhelmed, the events can buffer in the broker. The asynchronous nature means a slow service doesn’t directly slow down the event producer – it decouples performance concerns. Also, if a consumer is down temporarily, events can be stored (depending on the system) until it comes back, adding resilience. In a synchronous architecture, if Service B is down, Service A might be blocked; in EDA, Service A just emits events and moves on, and Service B can catch up later (if using a log-based broker like Kafka). This improves overall system fault tolerance and elasticity.
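
The "Service B can catch up later" behaviour can be sketched with an append-only log and a consumer that tracks its own offset. `EventLog` and `Consumer` are hypothetical names, loosely modelled on a single partition of a log-based broker; nothing here is a real client API.

```python
class EventLog:
    """Hypothetical append-only log, loosely modelled on one log partition."""

    def __init__(self) -> None:
        self._events: list[str] = []

    def append(self, event: str) -> int:
        """Store the event and return its offset; the producer never waits for consumers."""
        self._events.append(event)
        return len(self._events) - 1

    def read(self, offset: int, max_events: int = 10) -> list[str]:
        """Return up to max_events events starting at the given offset."""
        return self._events[offset : offset + max_events]


class Consumer:
    """Tracks its own offset so it can resume where it stopped."""

    def __init__(self, log: EventLog) -> None:
        self._log = log
        self.offset = 0
        self.processed: list[str] = []

    def poll(self, max_events: int = 10) -> int:
        """Process the next batch and advance the offset; return the batch size."""
        batch = self._log.read(self.offset, max_events)
        self.processed.extend(batch)
        self.offset += len(batch)
        return len(batch)


# Usage: Service A keeps appending while Service B is "down".
log = EventLog()
service_b = Consumer(log)
log.append("order-1")
service_b.poll()
log.append("order-2")  # Service B is offline here
log.append("order-3")
caught_up = service_b.poll()  # Service B returns and reads what it missed
```

Because the log retains events, the producer's pace and the consumer's pace are independent; the `max_events` limit is what lets a consumer drain a backlog in manageable batches.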

Complex Event Processing and Patterns - EDA allows advanced patterns like event streaming analytics, windowing, and aggregation. Using stream processing frameworks (Flink, Spark Streaming, Kafka Streams), businesses can derive insights or trigger actions on combined or temporal patterns of events. For example, detecting that a user added items to a cart but didn’t check out within 10 minutes, and then triggering a reminder, is the kind of pattern that event streams and processors handle elegantly. EDA is not just “one event = one action”; it can involve sophisticated event processing pipelines for deriving higher-level events or outcomes.
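
The cart-abandonment pattern can be illustrated without any framework. The sketch below evaluates a list of events at a single point in time (`now`); a real stream processor would instead use timers or windows to fire the reminder as soon as the 10 minutes elapse. The event kinds and the `find_abandoned_carts` helper are assumptions made for this example.

```python
from dataclasses import dataclass

ABANDON_AFTER_SECONDS = 10 * 60


@dataclass(frozen=True)
class CartEvent:
    user_id: str
    kind: str         # "cart.item_added" or "checkout.completed" (hypothetical names)
    timestamp: float  # seconds since an arbitrary epoch


def find_abandoned_carts(events: list[CartEvent], now: float) -> list[str]:
    """Return users with an open cart whose first item was added 10+ minutes before `now`."""
    pending: dict[str, float] = {}  # user_id -> time of first add since last checkout
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.kind == "cart.item_added":
            # Keep the earliest add; later adds do not restart the clock in this sketch.
            pending.setdefault(event.user_id, event.timestamp)
        elif event.kind == "checkout.completed":
            pending.pop(event.user_id, None)
    return sorted(
        user for user, added_at in pending.items()
        if now - added_at >= ABANDON_AFTER_SECONDS
    )


# Usage: alice never checks out, bob does, carol added her item only recently.
events = [
    CartEvent("alice", "cart.item_added", 0),
    CartEvent("bob", "cart.item_added", 0),
    CartEvent("bob", "checkout.completed", 120),
    CartEvent("carol", "cart.item_added", 500),
]
to_remind = find_abandoned_carts(events, now=700)
```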

Better Data Flow and Integration - In enterprises, EDA can serve as a unifying mechanism for integrating disparate systems. Instead of point-to-point integrations (which get messy as systems increase), a central event bus can distribute information. For instance, an ERP system can publish an “Inventory Updated” event; many other systems (website, mobile app, procurement system) can consume that to update their state. It provides a single source of truth for events. Additionally, storing events (as in event sourcing or Kafka’s durable log) means a historical sequence of events is available for auditing or rebuilding state – which is useful in financial systems or any system where reconstructing state from events is desirable.
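
Rebuilding state from a stored sequence of events is essentially a fold over the log. Here is a minimal sketch; the event types and the `rebuild_inventory` helper are hypothetical and chosen only to mirror the inventory example.

```python
def rebuild_inventory(events: list[dict]) -> dict[str, int]:
    """Replay inventory events in order and return the resulting stock per SKU."""
    stock: dict[str, int] = {}
    for event in events:
        sku, quantity = event["sku"], event["quantity"]
        if event["type"] == "inventory.received":
            stock[sku] = stock.get(sku, 0) + quantity
        elif event["type"] == "inventory.shipped":
            stock[sku] = stock.get(sku, 0) - quantity
        # Unknown event types are ignored so that newer events don't break old readers.
    return stock


# Usage: the same log answers "what is the state now?" and "what was it earlier?".
history = [
    {"type": "inventory.received", "sku": "widget", "quantity": 10},
    {"type": "inventory.shipped", "sku": "widget", "quantity": 3},
    {"type": "inventory.received", "sku": "gadget", "quantity": 5},
]
current = rebuild_inventory(history)
after_first_event = rebuild_inventory(history[:1])  # audit an earlier point in time
```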

User Experience Improvements - On the frontend, designing applications to be event-driven (with WebSockets or Server-Sent Events (SSE) pushing events to the UI) leads to more dynamic, collaborative, and responsive user experiences. WebSockets and SSE are implementation mechanisms rather than EDA itself, but the architectural mindset of thinking in events from backend to UI helps create live-updating interfaces and cross-system communication, such as one user’s action becoming immediately visible to another.

Real-World Success Examples - Many tech giants and modern systems attribute their scalability to EDA. For instance, LinkedIn (which created Kafka) processes an astonishing 7 trillion messages per day through its event pipeline – powering features like feeds, analytics, and more. Netflix uses an event-driven approach for parts of its infrastructure (such as monitoring systems triggering self-healing actions). Uber’s architecture for dispatch and tracking is highly event-driven. These examples show that at extreme scale, EDA is a proven approach.

Weaknesses and Limitations: Transitioning to or operating an event-driven architecture also introduces challenges:

Increased Complexity in Design and Debugging - EDA can make system behaviour harder to reason about. Instead of a straightforward call chain, you have events triggering multiple asynchronous processes. Tracing what happened for a single transaction can be difficult (though distributed tracing tools help by correlating events). Developers must think in an asynchronous mindset and ensure eventual consistency, which is a different paradigm from sequential calls. If something goes wrong (say an event consumer misbehaves), it may not be immediately obvious; there isn’t a simple stack trace. Debugging often involves checking logs across multiple services and event histories. In short, the loose coupling means the flow of logic is not explicit, which can confuse unless well-documented and monitored.
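
One common way to correlate events belonging to the same transaction is to propagate a correlation ID from the originating event to every event it causes. The sketch below assumes a simple dict-based event shape; `new_event` and `trace` are hypothetical helpers, not part of any tracing library.

```python
import uuid
from typing import Optional


def new_event(event_type: str, payload: dict, caused_by: Optional[dict] = None) -> dict:
    """Create an event that inherits the correlation ID of the event that caused it."""
    return {
        "id": str(uuid.uuid4()),
        "type": event_type,
        "payload": payload,
        # A root event starts a new correlation ID; follow-up events reuse it.
        "correlation_id": caused_by["correlation_id"] if caused_by else str(uuid.uuid4()),
    }


def trace(events: list[dict], correlation_id: str) -> list[str]:
    """Return the types of all events in one logical transaction, in log order."""
    return [e["type"] for e in events if e["correlation_id"] == correlation_id]


# Usage: two unrelated flows interleave in the same event history.
order = new_event("order.placed", {"order_id": 1})
signup = new_event("user.signed_up", {"user_id": 9})
payment = new_event("payment.captured", {"order_id": 1}, caused_by=order)
shipment = new_event("shipment.created", {"order_id": 1}, caused_by=payment)
order_flow = trace([order, signup, payment, shipment], order["correlation_id"])
```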

Eventual Consistency & Data Duplication - By decoupling components, you often accept eventual consistency – e.g., an order is placed, and the order service emits an event; the inventory and shipping services will update themselves “eventually” after getting the event. There is a time window where not all parts of the system have the up-to-date info. This is fine in many cases, but for some domains (like certain financial transactions) careful handling is needed. Also, services may maintain local copies of data (e.g., a materialized view) based on events, leading to duplicates of data across the system that must be kept in sync via events. Managing data consistency and handling out-of-order events or duplicates (if they occur) adds complexity.
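
Duplicate deliveries are usually handled by making the consumer idempotent. A minimal sketch, assuming every event carries a unique `event_id` (an assumption of this example, not something every broker provides) and using a hypothetical `InventoryProjection` as the local copy of data:

```python
class InventoryProjection:
    """Local copy of stock levels, kept in sync by consuming events."""

    def __init__(self, initial_stock: dict[str, int]) -> None:
        self.stock = dict(initial_stock)
        # In a real system this set would be persisted and eventually expired.
        self._seen_event_ids: set[str] = set()

    def handle(self, event: dict) -> bool:
        """Apply an "order placed" event once; return False for a duplicate delivery."""
        if event["event_id"] in self._seen_event_ids:
            return False
        self._seen_event_ids.add(event["event_id"])
        sku = event["sku"]
        self.stock[sku] = self.stock.get(sku, 0) - event["quantity"]
        return True


# Usage: the broker redelivers the same event, but stock is decremented only once.
projection = InventoryProjection({"widget": 10})
order_placed = {"event_id": "evt-1", "sku": "widget", "quantity": 2}
first = projection.handle(order_placed)
second = projection.handle(order_placed)  # redelivery
```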

Ordering and Transaction Semantics - In a distributed event system, guaranteeing the order of events as seen by all consumers can be challenging. Some brokers, like Kafka, preserve order within a partition, but if events are partitioned for scalability, two related events might go to different partitions and be processed out of order by different consumers. If order matters (e.g., “account credited” then “account debited” must be processed in that order), architects need to design for it, for example by keying events so that related events go to the same partition. There are also no global transactions: if an event triggers two actions, one could succeed and the other fail, so compensating actions might be needed (the Saga pattern). Designing idempotent consumers (so reprocessing events is safe) and achieving exactly-once semantics is tricky; some platforms provide tools for this, but using them correctly is not trivial.
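
Keying events so that related ones share a partition can be sketched with a stable hash of the key. This is an illustration of the idea only: the hash below (SHA-256) is an arbitrary choice for the example and is not the partitioner any particular broker uses, and `partition_for` and `route` are hypothetical helpers.

```python
import hashlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition deterministically, so equal keys always collide."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def route(events: list[tuple[str, str]], num_partitions: int) -> list[list[tuple[str, str]]]:
    """Append each (account_id, action) event to the partition chosen by its key."""
    partitions: list[list[tuple[str, str]]] = [[] for _ in range(num_partitions)]
    for account_id, action in events:
        partitions[partition_for(account_id, num_partitions)].append((account_id, action))
    return partitions


# Usage: both events for acct-1 land in one partition, in the order they were produced.
events = [("acct-1", "credited"), ("acct-2", "credited"), ("acct-1", "debited")]
partitions = route(events, num_partitions=4)
acct_1_partition = partitions[partition_for("acct-1", 4)]
acct_1_actions = [action for key, action in acct_1_partition if key == "acct-1"]
```

Ordering is only guaranteed per key here; events for different accounts may still be processed in any relative order, which is the trade-off that makes partitioning scale.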

Tooling and Skill Set - Adopting EDA often requires introducing new infrastructure (message brokers, event streams, stream processing engines) and learning how to use it. It’s not as familiar to all development teams as, say, building a REST API. Skilled engineers who understand distributed streaming, backpressure, and concurrency are needed. Operating these systems (Kafka clusters, RabbitMQ clusters, etc.) requires DevOps effort. Many organizations find they need to invest in platform engineering to provide event streaming as a service internally to make it easier. Without proper tooling, an EDA can devolve into an unmanageable tangle of events.

Overhead and Performance Considerations - If misapplied, EDA can introduce latency. For instance, a simple synchronous operation could become slower if split into multiple asynchronous steps due to event routing and queueing time. There’s also overhead in running brokers and persisting events. In high-throughput scenarios, event streaming platforms like Kafka handle it well, but using a less scalable broker or having very fine-grained events can cause bottlenecks. Essentially, it’s possible to over-architect with events – not every communication needs to be asynchronous, and making everything an event can sometimes complicate things unnecessarily. Finding the right balance of synchronous vs asynchronous is key.

Consistency of Event Schema and Versioning - As with any contract, event formats (schemas) need version management. With many producers and consumers, updating an event’s schema must be done carefully to not break consumers. You might need a schema registry or a strategy for deprecating old event versions. This is analogous to API versioning but can be more difficult as events flow invisibly through the system – you need governance to ensure all teams know what events exist and what their structure is (an event catalog of sorts).
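
One strategy for living with several event versions at once is to "upcast" old events to the newest shape at the consumer's edge, so the rest of the consumer only deals with one version. The sketch below invents a two-version `OrderPlaced` schema purely for illustration; the field names and the USD default are assumptions.

```python
def upcast_order_placed(event: dict) -> dict:
    """Convert any known version of the hypothetical OrderPlaced event to version 2."""
    version = event.get("schema_version", 1)  # events without a version are treated as v1
    if version == 1:
        # v1 carried a bare "amount" in cents with an implicit currency (assumed USD here).
        return {
            "schema_version": 2,
            "order_id": event["order_id"],
            "total": {"amount_cents": event["amount"], "currency": "USD"},
        }
    if version == 2:
        return event
    # Fail loudly on versions this consumer was never taught about.
    raise ValueError(f"unsupported schema_version: {version}")


# Usage: old and new producers can coexist while consumers migrate.
old_event = {"order_id": "o-1", "amount": 1999}
new_event_v2 = {
    "schema_version": 2,
    "order_id": "o-2",
    "total": {"amount_cents": 500, "currency": "EUR"},
}
normalized = [upcast_order_placed(e) for e in (old_event, new_event_v2)]
```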

Potential for Event Storms or Cascading Failures - If not controlled, events can generate more events, which in turn generate more. There’s a risk of feedback loops or of broadcasting too widely. Also, if one component goes into a rapid event-firing loop (because of a bug or a surge), it could flood the system. Consumer services need to handle bursts or apply backpressure; otherwise, a slow consumer’s queue might grow unbounded if producers are much faster (depending on broker behaviour). Monitoring and throttling are important to prevent one faulty component from overwhelming others in an EDA.
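
A bounded queue is the simplest form of backpressure: once the consumer's inbox is full, the producer is told "no" instead of the queue growing without limit. The sketch below uses Python's standard `queue.Queue`; the `BoundedInbox` wrapper and its reject-and-count policy are assumptions for this example (other policies include blocking the producer or dropping the oldest events).

```python
import queue


class BoundedInbox:
    """Hypothetical consumer inbox that rejects events once it is full."""

    def __init__(self, capacity: int) -> None:
        self._queue: queue.Queue = queue.Queue(maxsize=capacity)
        self.rejected = 0  # worth exporting as a metric for monitoring

    def offer(self, event: object) -> bool:
        """Try to enqueue without blocking; return False if the inbox is full."""
        try:
            self._queue.put_nowait(event)
            return True
        except queue.Full:
            self.rejected += 1
            return False

    def drain(self, max_events: int) -> list:
        """Remove and return up to max_events events for processing."""
        drained = []
        while len(drained) < max_events:
            try:
                drained.append(self._queue.get_nowait())
            except queue.Empty:
                break
        return drained


# Usage: a burst of five events hits an inbox that can hold two.
inbox = BoundedInbox(capacity=2)
accepted = [inbox.offer(n) for n in range(5)]
processed = inbox.drain(max_events=10)
```

The `rejected` counter is the signal to throttle or alert on: a steadily rising value means producers are outpacing this consumer.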

Despite the challenges, the use cases for EDA are compelling: enterprise-wide integration; microservices that must scale independently; systems requiring high-throughput data ingestion and processing (like IoT sensor networks, where millions of events per second need to be processed and a platform like Kafka shines); and any scenario where real-time analytics or reaction is needed (e.g., monitoring systems that trigger self-healing, user behaviour tracking for real-time personalisation, supply chain events reacting instantly to inventory changes). Many organisations pursue EDA to enable new capabilities like streaming analytics, AI/ML on real-time data, and improved customer experiences through responsiveness. Surveys confirm that an overwhelming majority of organisations consider EDA important to meet business needs, with 85% recognising its value. However, it’s a journey: incremental adoption, starting with key event flows, is common. In summary, EDA is about designing for asynchronicity, decoupling, and real-time flow of information, which can yield highly scalable and flexible systems at the cost of greater architectural and operational complexity.
